The wise work manager for context-based scoping

It's a long-standing rule of thumb that we should not use java.lang.Thread within a Java EE server's servlet or Enterprise JavaBeans (EJB) container. The reason is that a managed environment should have complete visibility over all spawned threads; when users create their own threads, that visibility is lost. In this article we explain how, instead of creating new threads for certain tasks, to reuse threads safely in a managed environment, running time-consuming tasks in parallel and improving both response time and throughput. To achieve these goals, we use the Work Manager for Application Servers Specification, which provides an application-server-supported facility for concurrent execution. We describe how to convert a startup servlet to do its tasks in parallel, how to do audit-trailing without using Java Message Service, and how to change some time-consuming API calls into fast, asynchronous invocations.

The code and examples shared in this article run successfully in IBM WebSphere Application Server 6.0.2. With minor changes, the code can also be reused in other environments that support the Work Manager API. Please refer to the relevant application server resources.

The problem

Suppose we have some startup servlets in an existing Java EE application. There are 13 different modules in the complete ear file, which is a mix of war files (Web projects) and jar files (client or utility files). The existing startup servlet of one module takes about 6 minutes, because the application caches a lot of data from the database, loads and instantiates some classes from an XML configuration file, and so forth. Each Web module has a corresponding startup servlet; two of those servlets each take 10 minutes to start and the rest take 30 seconds each. The servlets run sequentially, in the order in which the application server loads each Web module. Even worse, when the ear deploys in a clustered environment of 10 servers, the total startup time is 200 minutes, because the cluster nodes also start up sequentially.

Obviously, we need a performance boost. Our solution is to use the Work Manager API, which eventually reduces the startup time to less than a minute.

Introducing the Work Manager API

In considering how to solve our problem, we first think about the sequential loading of the startup servlets of the individual Web modules in the ear file. If we had a mechanism to run these startup servlets in parallel, the first phase of the solution would reduce the overall startup time to 6 to 7 minutes. (This assumes all startup servlets perform independent actions. We will explain how to handle dependent modules later.)

You may be considering spawning threads in each startup. However, we already have mentioned that the Java EE specification does not advocate using our own threads within a container. Then can we use a single thread? There are two problems with this approach:

1. You are not supposed to create a thread within a container.

2. Even if you create a thread, how will you be able to pass the individual module-specific metadata (that is, the classpath information, the transactional information, the security, and so forth) to that single thread? That's impossible.

The Work Manager API can help out with this situation, freeing us from creating our own threads and giving us the facility to pass context-sensitive information. If you tell a work manager to do something, you can assume the container will perform the work as if the work manager is sitting within your current Web context.

A work manager starts a daemon worker thread in parallel with the application server. You configure the work manager through the administration console and give it a Java Naming and Directory Interface (JNDI) name, just as you attach a JNDI name to an EJB component. This JNDI name is available in the global namespace. When you want your Web module to perform an action, you create an implementation of the Work interface and submit that instance to the work manager. The work manager daemon then dispatches another thread, which invokes the run method of your Work implementation. Because it is backed by a thread pool, the work manager can supply threads for as many Work implementations as are submitted to it. In addition, the work manager takes a snapshot of the current Java EE context on the thread when the work is submitted. We can think of the result as a WorkWithExecutionContext object.
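The context snapshot can be pictured with plain java.util.concurrent classes. The following is only a sketch of the idea, not WebSphere's implementation: an ExecutorService stands in for the work manager, the thread's context class loader stands in for the full Java EE context, and the names ContextSnapshotSketch, withCallerContext, and workerSeesCallerLoader are hypothetical.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Illustrative stand-in only; not the WebSphere work manager. */
class ContextSnapshotSketch {

    /** Wraps a task so that it runs with the submitter's context class loader. */
    static Runnable withCallerContext(Runnable task) {
        // Snapshot taken on the submitting thread, at submission time.
        final ClassLoader callerLoader = Thread.currentThread().getContextClassLoader();
        return () -> {
            Thread worker = Thread.currentThread();
            ClassLoader previous = worker.getContextClassLoader();
            worker.setContextClassLoader(callerLoader);   // apply the snapshot
            try {
                task.run();
            } finally {
                worker.setContextClassLoader(previous);   // leave the pooled thread clean
            }
        };
    }

    /** Returns true if the pooled thread observed the submitter's class loader. */
    static boolean workerSeesCallerLoader() {
        // Hypothetical "module" class loader, distinct from the default one.
        ClassLoader moduleLoader =
                new ClassLoader(ContextSnapshotSketch.class.getClassLoader()) {};
        Thread caller = Thread.currentThread();
        ClassLoader original = caller.getContextClassLoader();
        ExecutorService pool = Executors.newFixedThreadPool(1);
        try {
            // Create the pooled thread first, so it does not inherit moduleLoader.
            pool.submit(() -> {}).get();
            caller.setContextClassLoader(moduleLoader);
            final ClassLoader[] seen = new ClassLoader[1];
            pool.submit(withCallerContext(
                    () -> seen[0] = Thread.currentThread().getContextClassLoader())).get();
            return seen[0] == moduleLoader;
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            caller.setContextClassLoader(original);
            pool.shutdown();
        }
    }
}
```

A real work manager captures far more than the class loader (naming, security, and transactional context, for instance), but the pattern is the same: capture on the submitting thread, apply on the worker thread, and restore afterward.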

In Figure 1 below, we show the background scenario. When a module submits a task to the work manager, a corresponding context is created by inheriting all the contexts of the caller module.

Figure 1. Context-based execution

As an example, let's assume we have a startup servlet AServlet in module A, which is part of our ear file. Suppose the startup servlet in this module uses three jar files: log4j.jar, concurrent.jar, and moduleAspecific.jar. When we call the work manager from module A's AServlet, the work manager makes the classpath entries log4j.jar, concurrent.jar, and moduleAspecific.jar available in the execution context of the global work manager thread. If other modules, say, module B and module C, also tell the work manager to execute their Work implementations, all the modules execute in parallel, none waiting for another to complete.

Figure 2. Each module can be executed in parallel with a work manager.

A new concept based on the work manager, named asynchronous beans, can be found in WebSphere 5.0 and later versions. An asynchronous bean is a Java object or EJB that can be executed asynchronously by a Java EE application by using the Java EE context of its creator. IBM has revamped the work manager so that it now is considered a thread pool. This new concept, along with the use of Java's concurrent utilities, forms the basis for Java Specification Request 237 (Work Manager for Application Servers), which most likely will be incorporated into Java EE 6. The details and downloads are available in Resources.

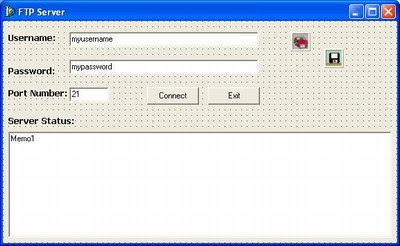

To illustrate how the manager works, we will use the following three classes from asynchbeans.jar, available within the lib directory of your WebSphere installation: Work, WorkManager, and WorkItem. You can start by creating a work manager, using WebSphere's administrative console.

Figure 3. Use the application server console for creating a work manager.

As shown in Figure 3, you can click on the Work Managers link on the left to create a work manager of your own. For our purposes here, however, we use the DefaultWorkManager, shipped with every WebSphere Application Server 5.x or 6.x. Please note the global JNDI name "wm/default" for this work manager. As explained before, this JNDI name is available across all the applications of your ear file, as it is a global namespace.

The solution

For the code that follows, you need some or all of the following three imports:

    import com.ibm.websphere.asynchbeans.Work;
    import com.ibm.websphere.asynchbeans.WorkItem;
    import com.ibm.websphere.asynchbeans.WorkManager;

To improve the performance of our startup servlets, let's begin by writing a CommonServiceLocator, which will have one method named getWorkManager(), as shown below:

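    // Requires javax.naming.InitialContext in addition to the imports above.
    // workManager is a static field of CommonServiceLocator.
    private static WorkManager workManager;

    // synchronized so that concurrent callers perform the JNDI lookup only once
    public static synchronized WorkManager getWorkManager()
    {
        if (workManager == null) {
            try {
                InitialContext ctx = new InitialContext();
                String jndiName = "java:comp/env/wm/default";
                workManager = (WorkManager) ctx.lookup(jndiName);
                System.out.println("WorkManager obtained");
            } catch (Exception ex) {
                OscarLogger.logException("", ex);
                System.out.println("Unable to lookup workmanager: " + ex.getMessage());
            }
        }
        // Still null if the lookup failed; callers should be prepared for that
        return workManager;
    }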

Let's assume we can invoke the above method anywhere in any module (provided we have put the above class in the application server's classpath):

WorkManager wmger = CommonServiceLocator.getWorkManager();

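Like any other resource, a work manager must also be declared in web.xml if you access it from a Web module. The declaration is a resource-ref entry (in WebSphere, of type com.ibm.websphere.asynchbeans.WorkManager); the java:comp/env/wm/default name used by the service locator resolves through that reference to the global wm/default name.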

It's time to complete our StartupServlet:

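    // Requires javax.servlet.http.HttpServlet in addition to the imports above.
    public class StartupServlet extends HttpServlet
    {
        public void init()
        {
            try
            {
                // configFileNames is optional. Here it is read from a servlet
                // init-param of the same name (a hypothetical parameter); you
                // may want to pass some XML or configuration files this way.
                String configFileNames = getInitParameter("configFileNames");

                WorkManager wm = CommonServiceLocator.getWorkManager();
                // Returns immediately; StartupWork.run() executes on a
                // thread owned by the work manager.
                wm.startWork(new StartupWork(configFileNames));
            }
            catch(Exception e)
            {
                e.printStackTrace();
            }
        }
    }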

Here, we have obtained our WorkManager instance with the help of the service locator we wrote earlier. Then we just call the startWork() method to initiate the action, which is written within the StartupWork class, explained below. Note that the calling thread won't wait for the action to finish; the call is asynchronous. If you have, say, 10 Web modules, you will have 10 different startup servlets with corresponding action classes. When the application server starts, it invokes the init methods of all the servlets without waiting for their actions to complete.
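The non-blocking behavior can be tried outside a container with a small stand-in. This is a sketch under stated assumptions: an ExecutorService plays the role of the work manager, execute() plays the role of startWork(), and the class and method names are hypothetical. Latches replace real startup work so that the outcome does not depend on timing.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Stand-in demo: an ExecutorService plays the role of the work manager. */
class ParallelStartupSketch {

    /**
     * Submits one startup task per module. Returns true if every submission
     * returned before any task finished, all tasks were in progress at the
     * same time, and all of them eventually completed.
     */
    static boolean startupsOverlap(int modules) {
        ExecutorService pool = Executors.newFixedThreadPool(modules);
        CountDownLatch allStarted = new CountDownLatch(modules);
        CountDownLatch mayFinish = new CountDownLatch(1);
        AtomicInteger finished = new AtomicInteger();
        try {
            for (int i = 0; i < modules; i++) {
                // Comparable to calling startWork() from one servlet's init().
                pool.execute(() -> {
                    allStarted.countDown();
                    try {
                        mayFinish.await();   // placeholder for slow startup work
                        finished.incrementAndGet();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            // Every "init()" has returned, yet no task has finished.
            boolean noneFinishedYet = finished.get() == 0;
            // All tasks are running concurrently once this latch opens.
            boolean allRunning = allStarted.await(5, TimeUnit.SECONDS);
            mayFinish.countDown();
            pool.shutdown();
            boolean terminated = pool.awaitTermination(5, TimeUnit.SECONDS);
            return noneFinishedYet && allRunning && terminated
                    && finished.get() == modules;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            pool.shutdownNow();
        }
    }
}
```

With independent modules, total startup time then approaches that of the slowest task rather than the sum of all tasks.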

Quickly add StartupServlet to web.xml and give an appropriate value for load-on-startup so that StartupServlet's init method is called during the application server's startup process.

As the StartupServlet snippet shows, we need to define a StartupWork class. Remember to implement the Work interface we have been discussing:

    class StartupWork implements Work
    {
        private String configFileNames;

        public StartupWork(String configFileNames)
        {
            this.configFileNames = configFileNames;
        }

        public void run()
        {
            try {
                //Please do your time-consuming actions here such as
                //loading and instantiating classes from, say, config file,
                //connecting to database and caching lots of data,
                //some complex I/O operations,
                //reading and storing an XML DOM,
                //doing some asynchronous logging or audit trailing, etc.
            } catch(Exception e) {
                e.printStackTrace();
            }
        }

        public synchronized void release()
        {
            // Release and cleanup here
        }
    }

As you can see, the Work interface simply extends the java.lang.Runnable interface. You need to implement two methods, run() and release(). In the run() method, you do all your heavy work; release() can be used for cleanup if needed. Note that the application should never call the release() method directly. It is usually called by the runtime when, for example, the Java Virtual Machine is shutting down. Also note that the above code could easily be extended to incorporate logging or audit-trailing, which often requires writing large amounts of data to files in a particular format.
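For a long-running Work, release() is the natural place to ask run() to stop. The sketch below illustrates that contract with a local stand-in interface, ReleasableWork, which merely mirrors the two methods described above so the example can run without the WebSphere jar. PollingWork and ReleaseSketch are hypothetical names, and the demo itself calls release() only because it is playing the role of the container.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Local stand-in with the same two methods as the Work interface. */
interface ReleasableWork extends Runnable {
    void release();
}

/** Hypothetical long-running work that loops until it is released. */
class PollingWork implements ReleasableWork {
    private volatile boolean released = false;   // written by release(), read by run()
    private final AtomicInteger cycles = new AtomicInteger();

    public void run() {
        while (!released) {
            cycles.incrementAndGet();            // placeholder for one unit of work
            try {
                Thread.sleep(5);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
        }
    }

    public void release() {
        released = true;                         // asks run() to return promptly
    }

    int cycles() {
        return cycles.get();
    }
}

class ReleaseSketch {
    /** Returns true if the work stops shortly after release() is called. */
    static boolean stopsWhenReleased() {
        PollingWork work = new PollingWork();
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            pool.execute(work);
            while (work.cycles() == 0) {
                Thread.sleep(1);                 // wait until run() is under way
            }
            work.release();                      // the demo acts as the container here
            pool.shutdown();
            return pool.awaitTermination(2, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            pool.shutdownNow();
        }
    }
}
```

The volatile flag matters: release() is invoked from a different thread than the one executing run(), so the write must be visible across threads.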

Finally, we would like to conclude by introducing one more class named WorkItem. Note that in the StartupServlet snippet, we do not capture the startWork() method's return value. In fact, startWork() returns an instance of WorkItem, through which you can capture the results of your actions. Further, several WorkItems can be combined to make the caller wait until the submitted works have completed.

For example, assume we have a Work implementation called SampleWork, whose run method fires a message to a back-end mainframe system, and that we have three such messages to fire. Previously, we fired them one by one, so the overall time was the sum of the individual times taken for each message. Using WorkItem, the approach could be:

    //Code as before to get Work Manager
    //Start three works; each startWork() call returns a WorkItem
    //(assuming SampleWork takes the message parameters in its constructor)
    WorkItem witem1 = wm.startWork(new SampleWork(message1params));
    WorkItem witem2 = wm.startWork(new SampleWork(message2params));
    WorkItem witem3 = wm.startWork(new SampleWork(message3params));

    //Create an ArrayList
    ArrayList items = new ArrayList();

    //Add the previous WorkItems to ArrayList
    items.add(witem1);
    items.add(witem2);
    items.add(witem3);

    //Join them using WorkManager wm
    wm.join(items, WorkManager.JOIN_AND, (int) WorkManager.INDEFINITE);

In the above code, we collect the WorkItems returned by startWork() and join on them, so the caller blocks until all of them complete. You may specify WorkManager.JOIN_OR instead to return as soon as any one of the works completes. Because the three messages now run in parallel, the overall time approaches that of the slowest message rather than the sum of all three, a saving that grows with the number of tasks to be joined.
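The two join modes have close analogues in java.util.concurrent, which makes them easy to experiment with outside a container. The following sketch is an analogy under stated assumptions, not the WebSphere API: invokeAll() stands in for a JOIN_AND join, invokeAny() for a JOIN_OR join, and JoinSketch, message, joinAnd, and joinOr are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Analogy only: an ExecutorService stands in for the work manager. */
class JoinSketch {

    /** Hypothetical back-end call: sleeps for the given time, then returns its name. */
    static Callable<String> message(String name, long millis) {
        return () -> {
            Thread.sleep(millis);
            return name;
        };
    }

    /** JOIN_AND analogue: blocks until every task has completed. */
    static List<String> joinAnd(List<Callable<String>> tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(tasks.size());
        try {
            List<String> replies = new ArrayList<>();
            // invokeAll returns the futures in the same order as the tasks.
            for (Future<String> reply : pool.invokeAll(tasks)) {
                replies.add(reply.get());
            }
            return replies;
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdownNow();
        }
    }

    /** JOIN_OR analogue: returns once any one task has completed successfully. */
    static String joinOr(List<Callable<String>> tasks) {
        ExecutorService pool = Executors.newFixedThreadPool(tasks.size());
        try {
            return pool.invokeAny(tasks);   // remaining tasks are cancelled
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdownNow();
        }
    }
}
```

One difference worth noting: invokeAny() cancels the tasks that have not finished, whereas the text above describes JOIN_OR only as returning once one work completes.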

Conclusion

This article examined the possibilities for high-performance Java EE applications using context-scoped threads created by the application server with the Work Manager API. Developers can use these high-performance threads to enhance the speed of startups, logging, audit-trailing and time-consuming method invocations without sacrificing core Java EE principles.